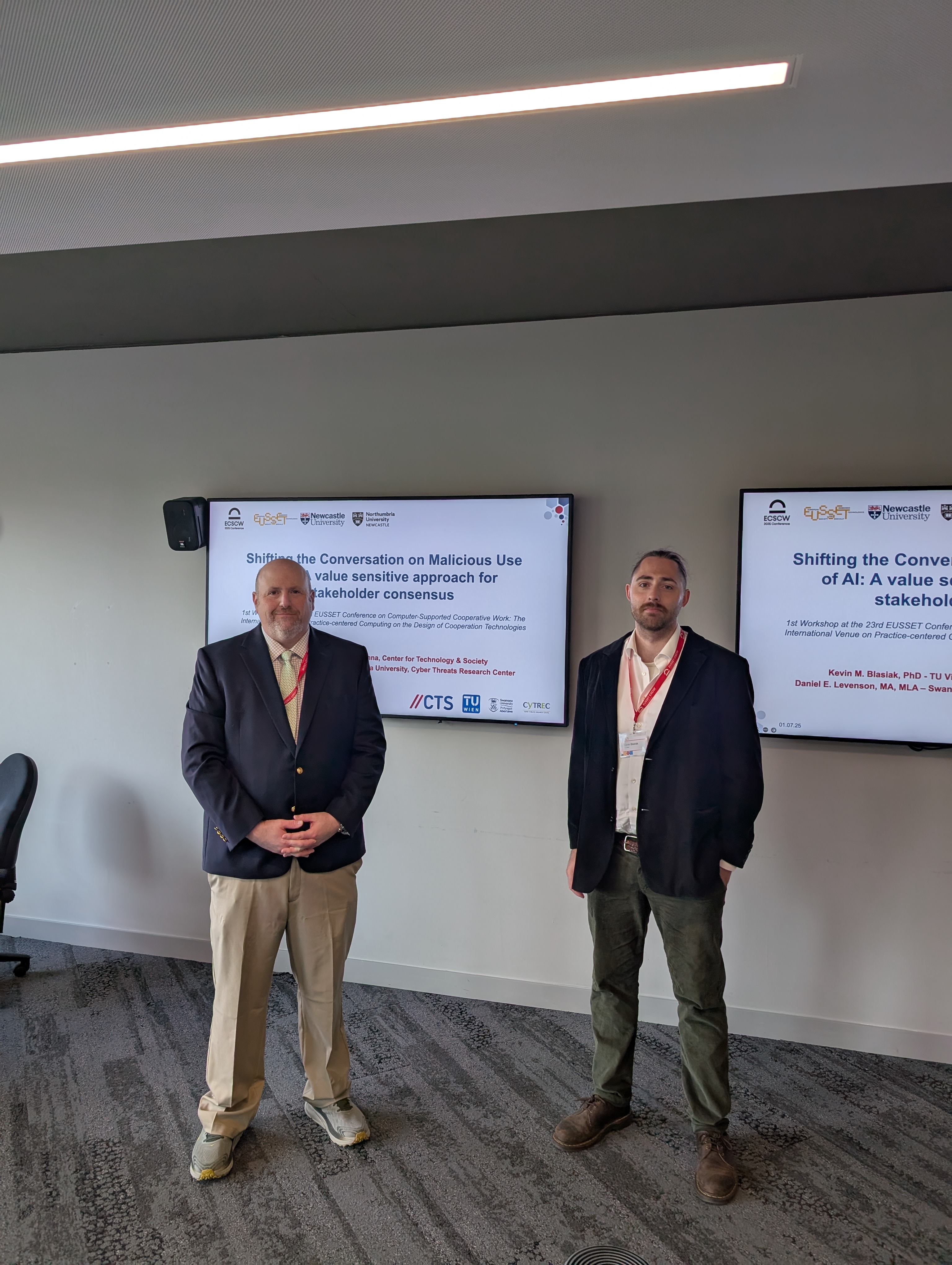

At the 2025 European Conference on Computer-Supported Cooperative Work (ECSCW) in Newcastle, UK, Kevin M. Blasiak (TU Wien, CTSi, circle.responsibleComputing) and Daniel E. Levenson (Swansea University) hosted the workshop "Shifting the Conversation on the Malicious Use of AI: A Value Sensitive Approach for Stakeholder Consensus".

The interdisciplinary workshop (available in the Proceedings of the 23rd EUSSET Conference on Computer Supported Cooperative Work) brought together researchers, policymakers, law enforcement innovators, and tech sector experts. It explored how emerging AI technologies, particularly generative AI, are being adapted by extremist and terrorist actors, and what ethical, practical, and governance responses might look like.

Purpose and Origins

This workshop grew out of the TASM 2024 Sandpit in Swansea, where early discussions revealed a pressing need for a multistakeholder forum focused on the dark side of AI adoption. Funded by CYTREC (Swansea University) and supported by the Center for Technology-driven Social Innovation (CTSi) at TU Wien, the ECSCW workshop offered a structured setting for collaborative dialogue and practical design work grounded in Value Sensitive Design (VSD).

Our goal was to identify key risks, ethical tensions, and potential interventions that could inform both policy and platform-level governance, especially in fields such as trust and safety, criminal justice, and AI ethics.

Who Was in the Room?

The workshop gathered around ten participants from a diverse range of sectors. Organizational leadership and expertise came from:

- The United Nations Office on Drugs and Crime (UNODC), specializing in global crime prevention and justice innovation

- The Swiss Federal Office of Communications (OFCOM), responsible for international digital policy

- KISPER, the innovation lab of the German Federal Police

- Microsoft, with representatives working at the intersection of AI ethics and responsible AI deployment

- Tech Against Terrorism, providing operational threat intelligence on extremist misuse of digital platforms

- Ofcom Ireland and several European research institutions, offering regulatory and academic perspectives

This diversity was critical in surfacing the systemic, cross-sectoral nature of the problem and the corresponding need for interdisciplinary approaches.

How We Worked

The day began with a detailed threat landscape briefing from Tech Against Terrorism, outlining how generative AI is already being exploited to produce multi-language propaganda, automate recruitment, create deepfakes, and evade platform moderation systems. This was followed by a series of short lightning talks that set the tone for critical reflection: Who benefits from AI right now? Who is left vulnerable? And how can these dynamics be shifted?

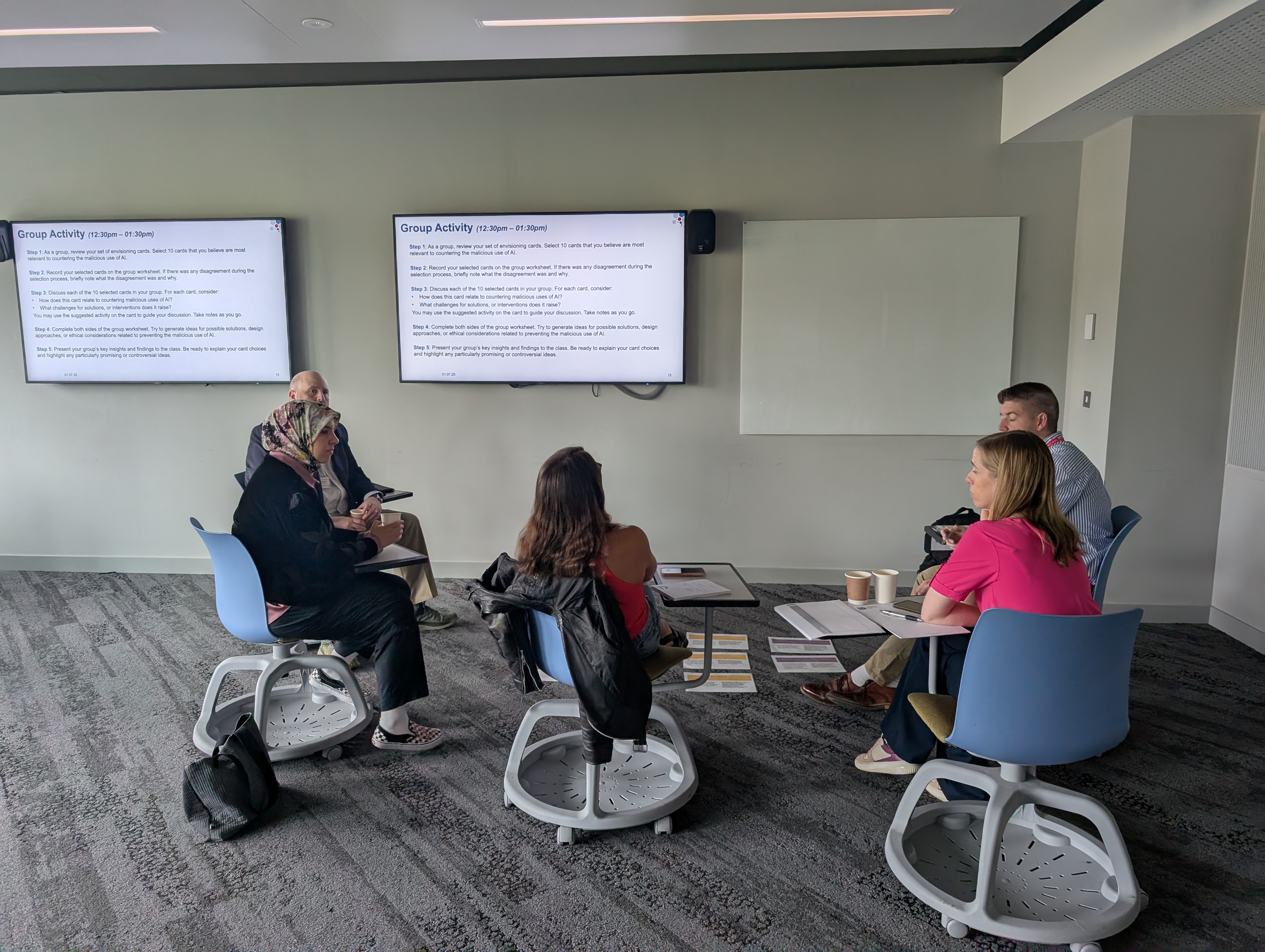

Participants then moved into small breakout groups, using the Envisioning Cards (a toolkit developed by the University of Washington’s Value Sensitive Design Lab) to structure discussion around stakeholder impacts, value tensions, and long-term consequences. The cards selected by participants reflected some of the workshop’s core themes: Adaptation, Crossing National Boundaries, Erosion of Trust, Future Stakeholders, Political Realities, Reimagining Infrastructure, Obsolescence, and Choosing Desired Values.

Key Themes and Takeaways

The discussions revealed several converging concerns:

- Extremist adaptation is outpacing governance. From coded language to synthetic media, terrorist actors are already exploiting generative AI to expand reach, personalize messaging, and evade detection. Existing regulatory and moderation tools are struggling to keep up.

- There is hype, but also substance. While concerns about AI can at times feel overblown, workshop participants emphasized that the threat is real, particularly in how AI lowers the barrier to producing high-impact, hard-to-detect content.

- Governance must be piecemeal but principled. One policy expert compared the situation to the historical regulation of engines: society never had one “engine law” but developed thousands of targeted rules over time. Similarly, we need a constellation of norms, laws, and safeguards tailored to different use cases, sectors, and risks.

- The absence of key actors is felt. Notably, social media platforms and other large AI companies were underrepresented. While Microsoft’s participation helped ground the conversation, future efforts need stronger involvement from the full range of actors shaping public-facing AI.

- Multistakeholder collaboration is not optional. Shifting AI governance toward societal benefit, rather than purely commercial gain, will require closer, sustained cooperation between states, companies, researchers, and civil society. Transdisciplinary, country-level initiatives could offer one path forward.

From Reflection to Action

The workshop closed with a commitment to continue this conversation. A follow-up report is in preparation, and we plan to present extended findings and recommendations at the Terrorism and Social Media (TASM) Conference in Swansea in 2026.

Participants agreed that while perfect solutions may not exist, better alignment between values, regulations, and technological infrastructures is both necessary and achievable, especially when informed by real-world insight and mutual learning across sectors.

We thank the Cyber Threats Research Centre (CYTREC) at Swansea University for the financial support that enabled practitioner participation, and the Center for Technology-driven Social Innovation (CTSi) at TU Wien for sponsoring the workshop. Finally, we are grateful to all participants for their expertise, critical engagement, and willingness to work across disciplinary and institutional boundaries.